Multi-tenancy in Kubernetes enables the hosting of multiple tenants or applications within single or multiple clusters while ensuring logical and resource isolation. This capability allows organisations to efficiently manage and deploy various workloads on shared infrastructure, resulting in cost reduction and optimal resource utilisation.

Multi-tenancy brings benefits such as scalability, simplified management, agility, cost optimization, and collaboration while maintaining the isolation of separate infrastructure. However, it’s important to note that when multi-tenancy is implemented incorrectly, it can lead to the loss of many isolation benefits. By embracing multi-tenancy correctly, organisations can streamline operations, maximise resource utilisation, and foster innovation in their Kubernetes deployments.

In the context of multi-tenancy in Kubernetes, the definition of a tenant can vary depending on whether it refers to multi-team or multi-customer tenancy. In multi-team scenarios, a tenant is typically a team deploying a limited number of workloads that align with their service’s complexity. However, the definition of a team itself can be flexible, with higher-level divisions or smaller teams within the organisation.

The absence of native multi-tenancy in Kubernetes presents significant challenges and limitations. Without proper multi-tenancy support, fair resource allocation becomes problematic, potentially resulting in resource contention and poor performance for tenants. Moreover, the lack of secure isolation between tenants raises concerns about unauthorised access and potential security breaches that could impact the entire cluster.

To overcome these limitations, the goal is to achieve robust multi-tenancy in Kubernetes by implementing effective isolation mechanisms, stringent resource management, and security measures. By doing so, efficient resource utilisation, tenant separation, and enhanced cluster security can be ensured, addressing the inherent limitations of Kubernetes’ native support for multi-tenancy.

In our analysis, we focus on three key aspects of Kubernetes multi-tenancy frameworks:

- Multi-tenancy approach

- Customization approach

- Management of tenant resource quotas

These aspects offer valuable insights into the diverse features and capabilities offered by the frameworks.

Multi-tenancy Approach

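When it comes to achieving multi-tenancy in Kubernetes, organisations can choose from three primary models:

- Multi-tenancy through multiple clusters

- Multi-tenancy through multiple control planes

- Multi-tenancy through a shared control plane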

Each model offers distinct approaches to managing multiple tenants or applications within a Kubernetes environment, addressing various needs for isolation, resource utilisation, and management complexity.

Multi-tenancy through Multiple Clusters

Multi-tenancy through Multiple Clusters is a model in which each tenant or application is hosted in its own separate Kubernetes cluster. This approach provides complete isolation and resource control for each tenant, ensuring that their workloads operate independently. It offers strong fault tolerance as any issues or failures in one cluster do not affect the others.

However, this model also introduces additional management overhead, as each cluster needs to be individually configured, monitored, and maintained. While this approach provides robust isolation, for our analysis, we will not examine it in detail.

Multi-tenancy through Multiple Control Planes

In this model, separate control planes are created for each tenant or application within a single Kubernetes cluster.

To support the tenant control planes, one or more nodes are dedicated for that purpose. Within each control plane node, pods isolate one tenant’s control plane from another’s. Using pods for isolating control planes imposes less overhead compared to using virtual machines (VMs). There are variations to this approach, where some control plane components, like the scheduler, are shared among tenants, while others, such as the API server and database, are duplicated to provide one instance for each tenant. In any case, this approach grants each tenant a complete view of its control plane, allowing customization of their environment.

Frameworks differ in how they isolate tenant workloads from each other. When tenants share a common set of worker nodes, as in Vcluster, isolation is weaker than when each tenant has its own dedicated set of worker nodes, as in Kamaji.

Vcluster, developed by Loft, is an open-source framework that follows the multiple control plane approach. Each Vcluster has its own API server and data store. Workloads created on a Vcluster are copied into the Vcluster’s namespace in the underlying cluster and scheduled by the shared scheduler. An architecture diagram of Vcluster is available in the project documentation.

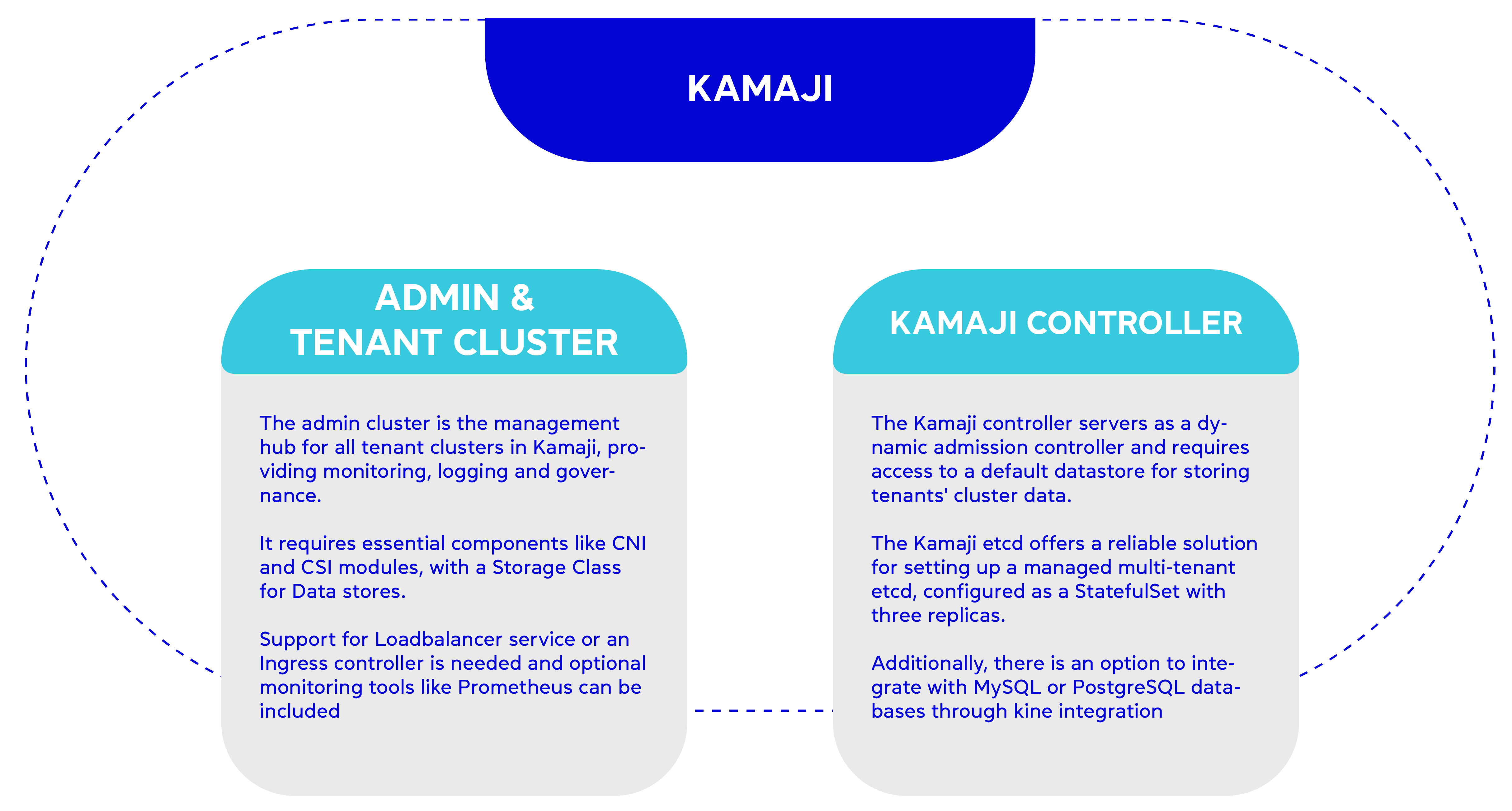

Kamaji is an open-source framework developed by Clastix Labs that follows the multiple control plane approach. It enables Kubernetes multi-tenancy by running tenant control planes as pods on a common cluster known as the admin cluster.

Each tenant is assigned its own dedicated worker nodes. Isolation between worker nodes on the same machine is achieved through VMs, although this introduces higher overhead than isolation through pods. An architecture diagram of Kamaji is available in the project documentation.

Multi-tenancy through Shared Control Plane

Multi-tenancy through a shared control plane involves multiple tenants or applications sharing the same Kubernetes control plane. Control plane isolation is ensured through a logical entity, such as Kubernetes namespaces, that introduces negligible overhead, but provides less control plane isolation compared to a multiple control plane approach.

The shared control plane approach offers lower operational costs. It is also more lightweight for workload mobility: pods can spin up and spin down faster and with less overhead than in the multiple control plane approach.

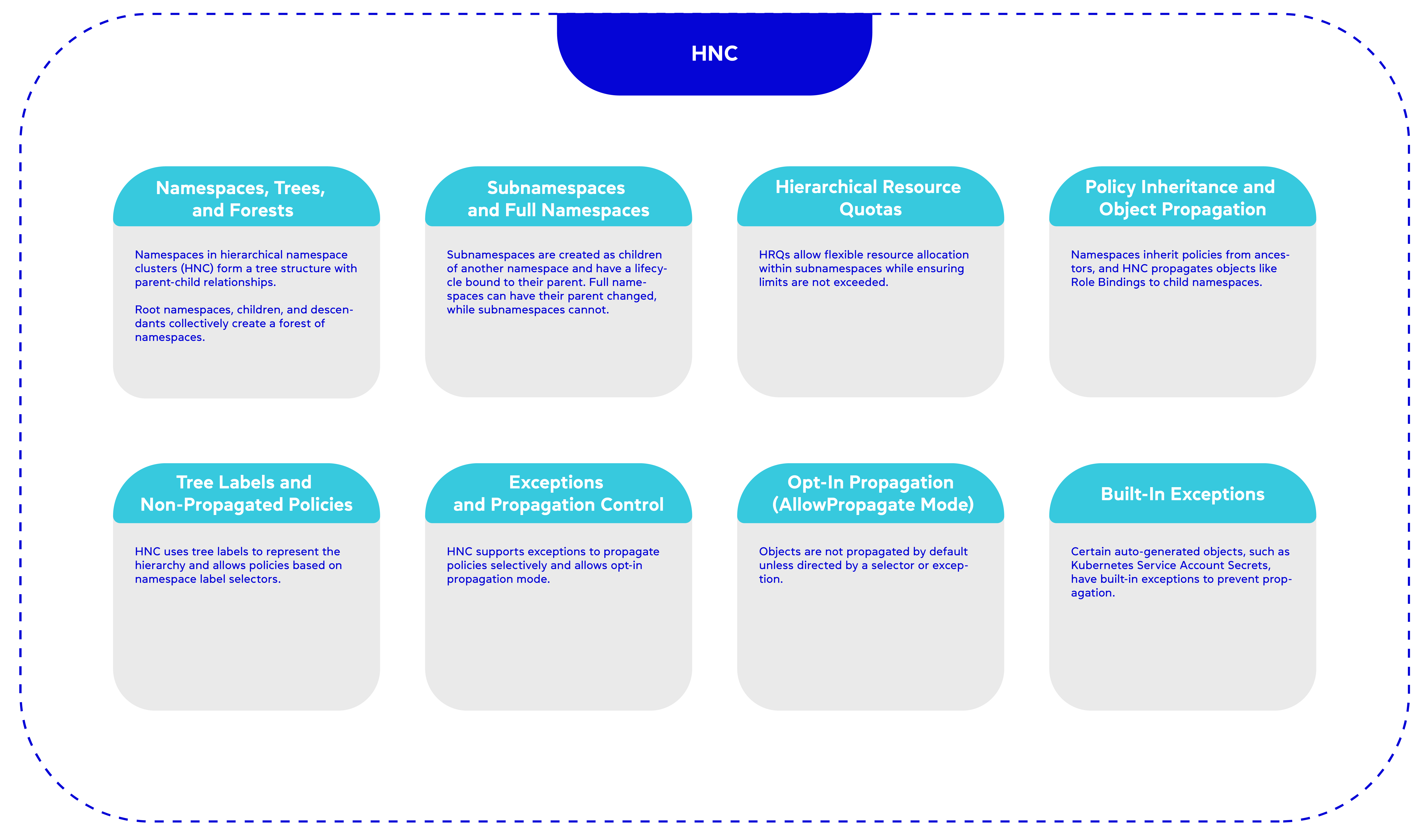

The Hierarchical Namespace Controller (HNC) is an open-source framework developed by the Kubernetes Multi-Tenancy Working Group, following the shared control plane approach. HNC utilises a hierarchical namespace structure to enable multi-tenancy, providing features such as policy inheritance and object replication across namespaces based on the hierarchy.

However, there are certain drawbacks associated with HNC:

- HNC does not enforce unique namespace names, which introduces the possibility of namespace conflicts.

- The quota management system in HNC is not aligned with the hierarchical namespace structure. As a result, it is difficult to restrict the resource quotas of child namespaces based on their parent namespaces’ quotas (hierarchical quota support is under development as of HNC v1.1).

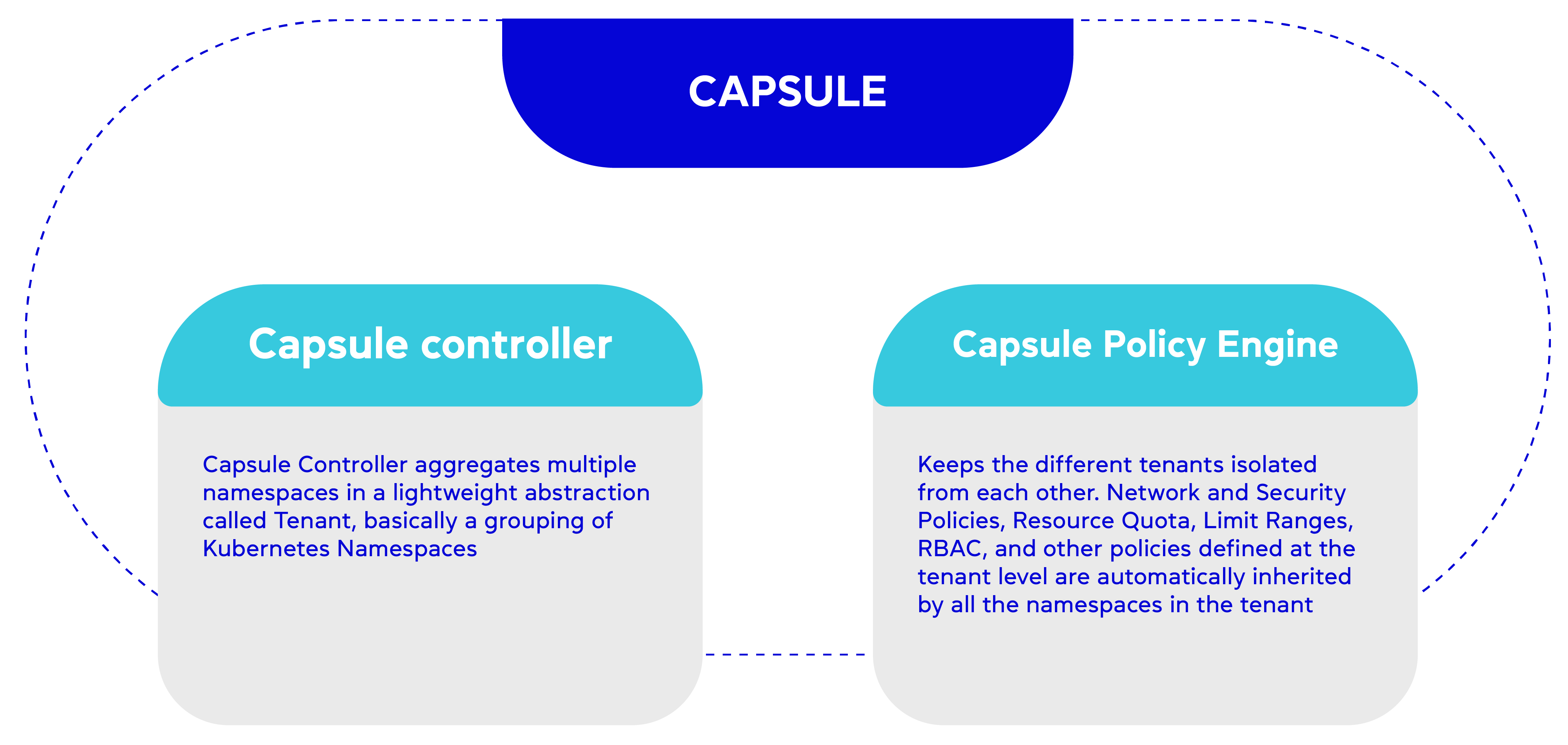

Capsule, developed by Clastix Labs, is an open-source framework that uses a shared control plane approach. It adopts flat namespaces as its customization approach, allowing tenants to create resources that can be replicated across their namespaces. Additionally, the cluster administrator can copy resources among different tenant namespaces.

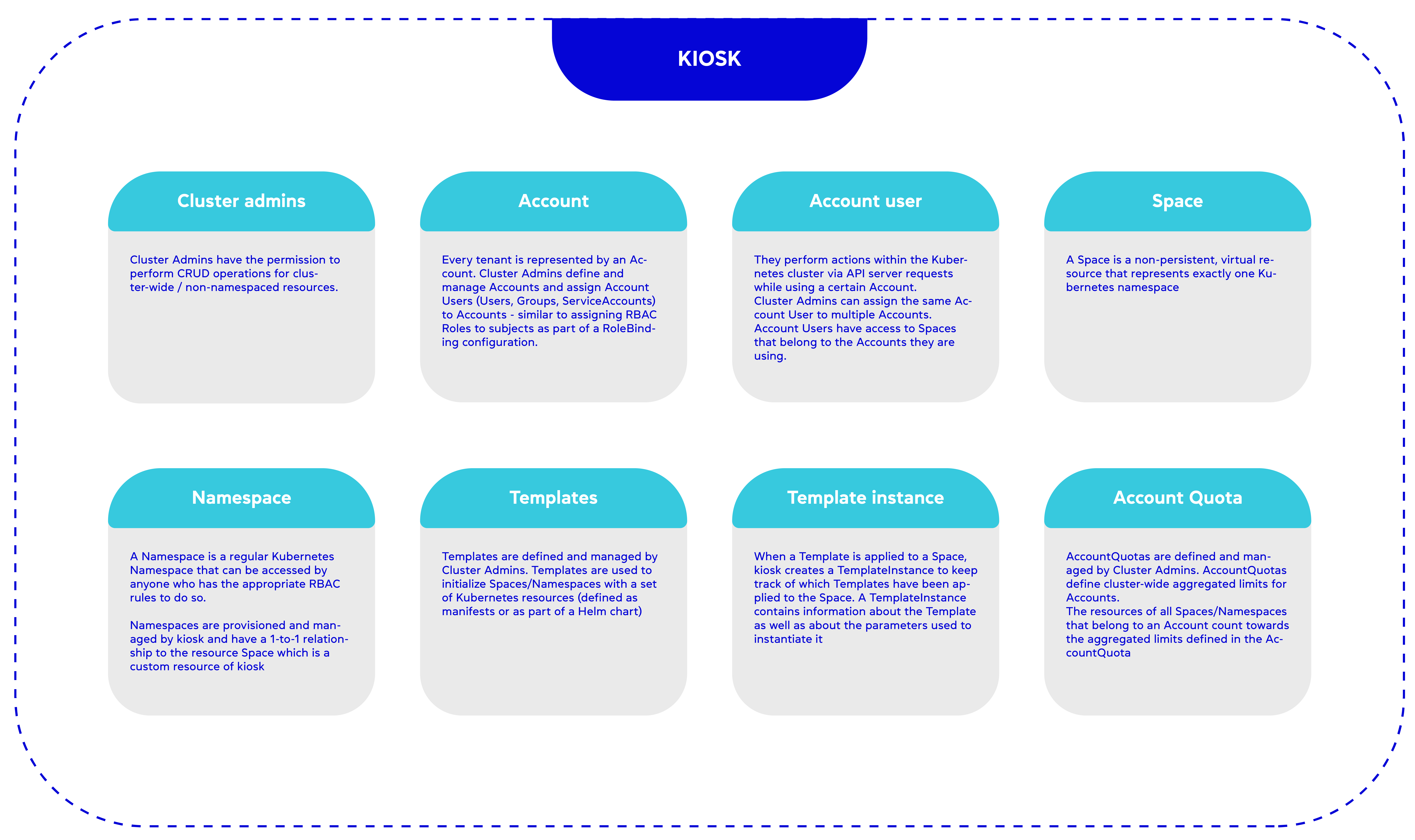

Kiosk, developed by Loft, also follows a shared control plane approach and uses flat namespaces for customization. In this framework, a tenant is represented by an abstraction called an account, and each account can create a namespace through a space entity. Templates can be prepared to automate the provisioning of resources within these namespaces during creation. While this approach helps reduce management complexity, it shares the limitations of Capsule due to the use of flat namespaces. An architecture diagram of Kiosk is available in the project documentation.

Comparing Multi-Tenancy Approaches

The following table seeks to compare the three different multi-tenancy solutions within the Kubernetes ecosystem. Each solution offers distinct advantages and trade-offs, catering to varying use cases and organisational needs.

Customization Approach

Each tenant should have a degree of autonomy to create and delete the environments in which its workloads can be deployed; obtain resource quotas and assign them to those environments; designate users for the environments, assign roles to those users, and grant permissions based upon those roles.

By giving each tenant its own control plane, which the tenant’s administrator can use to configure its environments as they wish, the multiple control plane frameworks (Vcluster, Kamaji) provide the greatest flexibility.

In frameworks that follow the shared control plane approach, some extensions to Kubernetes are required to safely enable customization. This is because in standard Kubernetes, giving a tenant’s administrator the permissions necessary to configure their own environments means giving them the ability to configure other tenants’ environments as well.

We divide the shared control plane frameworks into two categories according to how they structure namespaces:

Hierarchical Namespaces

This category requires more development work: custom controllers must keep track of the relationships between namespaces, such as several namespaces all belonging to the same tenant. With those relationships in place, management tasks such as approving new namespaces and modifying quotas or users can be delegated to each tenant’s administrators and, further down the hierarchy, to sub-tree administrators.
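As a rough illustration of the bookkeeping such a controller performs, the sketch below models a namespace tree as a child-to-parent mapping and computes which objects are visible in a namespace once everything defined in its ancestors has been propagated down. This is a toy model under stated assumptions, not the implementation of any particular framework; the namespace and object names are hypothetical.

```python
def propagated_objects(namespace, parents, defined):
    """Return the objects visible in `namespace`: its own plus its ancestors'.

    parents: maps each namespace to its parent (None for a root).
    defined: maps a namespace to the set of object names created in it.
    """
    visible = set()
    while namespace is not None:
        visible |= defined.get(namespace, set())
        namespace = parents.get(namespace)
    return visible


# Hypothetical hierarchy: team-a owns two subnamespaces, team-b is unrelated.
parents = {"team-a": None, "service-1": "team-a", "service-2": "team-a", "team-b": None}
defined = {
    "team-a": {"rolebinding/team-a-admins"},
    "service-1": {"networkpolicy/deny-all"},
}

if __name__ == "__main__":
    for ns in sorted(parents):
        print(ns, sorted(propagated_objects(ns, parents, defined)))
```

In a flat model the same result requires the administrator to create the shared object in every namespace explicitly, since no parent relationship exists to walk.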

Flat Namespaces

The flat namespaces approach, used by Capsule and Kiosk, follows the standard Kubernetes model, in which each namespace exists independently of every other namespace. The flat structure does not express any relationships. For example, no mechanism allows a namespace aa to inherit from a namespace a. If the two are to share configuration parameters, their common administrator must request this explicitly. There are efforts to address this through configuration templates that can be applied to multiple namespaces. Nevertheless, as a tenant’s number of namespaces grows, so does the management complexity for the tenant’s root admin, who must keep track of many independent namespaces.

Setup instructions for Capsule and Kiosk are available in their respective project documentation.

Tenant Resource Quota Allocation

Resource quotas serve as a fundamental mechanism for organisations to charge their tenants based on resource usage. They also play a crucial role in situations where resources are limited, ensuring fair distribution among different teams or tenants. In traditional setups, administrators manually define resource quotas per namespace to allocate resources among teams within the organisation. For a multi-tenancy framework built on Kubernetes, however, automating this process is essential for scalability. By automating resource quota management, the framework can handle the dynamic allocation of resources and accommodate a growing number of tenants or teams.

To add resource quotas to a single Vcluster, the administrator can follow these steps:

Create a ResourceQuota object: Define the maximum resource limits for the Vcluster by specifying the allowed CPU, memory, and pod count. For example, the quota could set a limit of 10 vCores, 20GB of memory, and a maximum of 10 pods.

Configure a LimitRange: To further ensure resource allocation within the Vcluster, you can set a LimitRange. This range defines default and defaultRequest values for memory and CPU limits of containers within the Vcluster. It helps ensure that containers without explicit resource requests and limits receive appropriate default values.

By implementing these steps, the administrator can enforce resource quotas and define resource limits within the Vcluster, ensuring efficient resource utilisation and preventing overconsumption.
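The two steps above can be sketched as a small script that builds both objects and prints them as JSON, which kubectl accepts alongside YAML. This is a minimal sketch, not the official Vcluster example: the object names, the host namespace `vcluster-team-a`, and the LimitRange default values are assumptions chosen for illustration, and the 20GB limit is written as `20Gi`.

```python
import json


def resource_quota(namespace):
    """ResourceQuota capping CPU, memory, and pod count in the namespace.

    In a ResourceQuota, the plain `cpu` and `memory` keys cap the sum of
    container requests.
    """
    return {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": "vcluster-quota", "namespace": namespace},
        "spec": {"hard": {"cpu": "10", "memory": "20Gi", "pods": "10"}},
    }


def limit_range(namespace):
    """LimitRange giving containers defaults when they declare none.

    The default values below are illustrative assumptions.
    """
    return {
        "apiVersion": "v1",
        "kind": "LimitRange",
        "metadata": {"name": "vcluster-limit-range", "namespace": namespace},
        "spec": {
            "limits": [
                {
                    "type": "Container",
                    "default": {"cpu": "1", "memory": "512Mi"},
                    "defaultRequest": {"cpu": "100m", "memory": "128Mi"},
                }
            ]
        },
    }


def manifest_list(namespace):
    """Bundle both objects into a single v1 List."""
    return {
        "apiVersion": "v1",
        "kind": "List",
        "items": [resource_quota(namespace), limit_range(namespace)],
    }


if __name__ == "__main__":
    # Hypothetical host namespace in which the Vcluster runs.
    print(json.dumps(manifest_list("vcluster-team-a"), indent=2))
```

Assuming the script is saved as `quota.py`, its output could be piped to `kubectl apply -f -` against the host cluster.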

To configure resource quotas in the Kamaji framework, you can use a YAML file containing the desired resource quota definition. The file specifies the limits for CPU, memory, and other resources. Applying it enforces the quotas within Kamaji, ensuring that tenant workloads do not exceed the specified limits. For detailed instructions on setting up the resource quota, refer to the Kamaji documentation.

Note that in both the Kamaji and Vcluster frameworks, the administrator is responsible for manually managing the subnamespaces within each framework. This is because the resource quotas are applied at the Vcluster or Kamaji namespace level, rather than automatically propagating to the subnamespaces. As an administrator, you will need to handle the allocation and enforcement of resource quotas for individual subnamespaces within the respective frameworks.

To use quotas in the Kiosk framework, you can utilise AccountQuotas to set limits for an Account, which are then aggregated across all Spaces within that Account.

AccountQuotas in Kiosk allow you to restrict resources in a similar way to Kubernetes ResourceQuotas. However, unlike ResourceQuotas, AccountQuotas are not limited to a single Namespace. Instead, they aggregate the resource usage across all Spaces within an Account and compare it to the limits defined in the AccountQuota.

If multiple AccountQuotas are referencing the same Account through the “spec.account” field, Kiosk will merge the quotas. In cases where different AccountQuotas define different limits for the same resource type, Kiosk will consider the lowest value among them.
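The merge rule described above can be illustrated with a short sketch. It is not Kiosk's own code: it assumes AccountQuota objects shaped as `spec.account` plus `spec.quota.hard`, uses a deliberately small parser that understands only a handful of Kubernetes quantity suffixes, and the account names are hypothetical.

```python
from decimal import Decimal

# Subset of Kubernetes quantity suffixes, enough for this sketch.
_SUFFIXES = {
    "Ki": Decimal(2) ** 10,
    "Mi": Decimal(2) ** 20,
    "Gi": Decimal(2) ** 30,
    "m": Decimal("0.001"),
    "k": Decimal(10) ** 3,
    "M": Decimal(10) ** 6,
    "G": Decimal(10) ** 9,
}


def parse_quantity(quantity):
    """Convert a quantity string such as '500m' or '1Gi' to a Decimal."""
    for suffix, factor in _SUFFIXES.items():
        if quantity.endswith(suffix):
            return Decimal(quantity[: -len(suffix)]) * factor
    return Decimal(quantity)


def merge_account_quotas(account_quotas, account):
    """Merge all quotas that reference `account`, keeping the lowest limit."""
    merged = {}
    for aq in account_quotas:
        if aq["spec"]["account"] != account:
            continue
        for resource, quantity in aq["spec"]["quota"]["hard"].items():
            current = merged.get(resource)
            if current is None or parse_quantity(quantity) < parse_quantity(current):
                merged[resource] = quantity
    return merged


# Hypothetical quotas: two for johns-account, one for another account.
quotas = [
    {"spec": {"account": "johns-account", "quota": {"hard": {"pods": "2", "limits.cpu": "4"}}}},
    {"spec": {"account": "johns-account",
              "quota": {"hard": {"pods": "5", "limits.cpu": "2000m", "limits.memory": "1Gi"}}}},
    {"spec": {"account": "other-account", "quota": {"hard": {"pods": "1"}}}},
]

if __name__ == "__main__":
    print(merge_account_quotas(quotas, "johns-account"))
```

Resources that appear in only one AccountQuota are carried over unchanged, while quotas that reference a different Account are ignored.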

To apply quotas in Capsule, the following steps can be followed:

- Define the resource quotas within the tenant’s specification in a YAML file. The file should include the desired quota limits for resources such as CPU, memory, and pods.

- Specify the quota limits within the “spec.resourceQuotas” section of the YAML file.

- Apply the YAML file to the cluster.

- Once the quotas are in place, the tenant can create resources within the assigned quotas.
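The steps above can be sketched as a script that builds a Tenant object with a “spec.resourceQuotas” section and prints it as JSON for kubectl. Treat this as a hedged sketch: the `capsule.clastix.io/v1beta2` API version, the `scope` field, and the tenant and owner names are assumptions, so check them against the Capsule version installed in your cluster.

```python
import json


def capsule_tenant(name, owner, hard):
    """Build a Capsule Tenant whose quota limits live in spec.resourceQuotas.

    The apiVersion and the `scope` field are assumptions about the Capsule
    API; `name` and `owner` are hypothetical.
    """
    return {
        "apiVersion": "capsule.clastix.io/v1beta2",
        "kind": "Tenant",
        "metadata": {"name": name},
        "spec": {
            "owners": [{"name": owner, "kind": "User"}],
            "resourceQuotas": {
                "scope": "Tenant",
                "items": [{"hard": dict(hard)}],
            },
        },
    }


if __name__ == "__main__":
    tenant = capsule_tenant(
        "team-a",
        "alice",
        {"limits.cpu": "8", "limits.memory": "16Gi", "pods": "10"},
    )
    print(json.dumps(tenant, indent=2))
```

Assuming the script is saved as `tenant.py`, a cluster administrator could pipe its output to `kubectl apply -f -`.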

To implement hierarchical quotas using the Hierarchical Namespace Controller (HNC), the following steps can be followed:

Create a HierarchicalResourceQuota (HRQ) in the parent namespace (e.g., namespace team-a) to define the overall amount of resources that can be used by all subnamespaces within the hierarchy.

The HRQ ensures that the sum of resources used by all subnamespaces does not exceed the configured limit in the parent namespace. This allows team-a to flexibly use resources in any of their subnamespaces without exceeding the allocated amount.

The resources allocated to team-a are shared among the applications running in its subnamespaces, resulting in efficient resource utilisation.

To enable individual teams or organisations within the hierarchy to create their own hierarchical quotas, a “policy” namespace can be inserted above each level. This allows sub-admins to create quotas in lower-level namespaces while adhering to the overall HRQ configured at higher levels.

The HRQ hierarchy follows a strict rule where lower-level quotas cannot override more restrictive quotas from ancestor namespaces. The most restrictive quota always takes precedence.
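The aggregation and precedence rules described above can be modelled in a few lines. This is a toy model of the behaviour, not HNC's implementation: it tracks a single resource in millicores, and the namespace names, limits, and usage figures are hypothetical.

```python
def ancestors(namespace, parents):
    """Yield the namespace itself, then each ancestor up to the root."""
    while namespace is not None:
        yield namespace
        namespace = parents.get(namespace)


def subtree_usage(root, parents, usage):
    """Sum the usage of `root` and every namespace beneath it."""
    return sum(
        used for ns, used in usage.items() if root in ancestors(ns, parents)
    )


def can_admit(namespace, request, parents, usage, hrq_limits):
    """Admit a request only if no HRQ on the path to the root is exceeded."""
    for ancestor in ancestors(namespace, parents):
        limit = hrq_limits.get(ancestor)
        if limit is None:
            continue
        if subtree_usage(ancestor, parents, usage) + request > limit:
            return False
    return True


# Hypothetical hierarchy and CPU figures in millicores.
parents = {"team-a": None, "service-1": "team-a", "service-2": "team-a"}
hrq_limits = {"team-a": 4000, "service-1": 1000}
usage = {"service-1": 500, "service-2": 2000}

if __name__ == "__main__":
    # Allowed: the team-a subtree reaches exactly 4000 millicores.
    print(can_admit("service-2", 1500, parents, usage, hrq_limits))
    # Rejected: service-1 has its own, more restrictive quota of 1000.
    print(can_admit("service-1", 600, parents, usage, hrq_limits))
```

Because every quota on the path to the root is checked, a generous limit lower in the tree can never loosen a stricter one set by an ancestor.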

You can create the HRQ CustomResource by applying a YAML file to the cluster. Usage information can be obtained using commands like “kubectl hns hrq” or “kubectl get hrq”. Refer to the quickstart example for further guidance.

The implementation of hierarchical quotas automatically creates ResourceQuota objects in each affected namespace. These objects are managed internally by HNC and should not be modified or inspected directly. You can use the “kubectl hns hrq” command or examine the HierarchicalResourceQuota object in the ancestor namespaces for inspection.

When defining quotas in HRQ, note that unquoted decimal values (for example, a bare 1.5) are not supported. Instead, quote the value as a string or use milliCPU units for precise resource allocation (e.g., “1.5” or “1500m” for CPU).

By leveraging HNC’s hierarchical quotas, teams and organisations can fairly and securely distribute resources among their members while adhering to overall resource limitations set by higher-level namespaces.

Note: The Hierarchical Resource Quotas (HRQ) feature in the Hierarchical Namespace Controller (HNC) is in beta as of HNC version 1.1. While HRQ provides advanced resource allocation capabilities within namespaces and their subnamespaces, the feature is still under development and may have limitations or change in future releases.