How we successfully keep multi-tenanted production clusters up to date.

CECG engineers have been running production Kubernetes clusters for one of our clients for over 8 years now. For context, that’s roughly when Kubernetes v1.0.0 was released and, at the time of writing, Kubernetes v1.28.0 is the latest GA version!

Those production clusters support several thousand developers split across several hundred development teams who are all aiming to get their latest builds out to users.

To take advantage of new features and bug fixes, we must regularly upgrade the version of Kubernetes that those clusters run. Upstream Kubernetes and the various managed Kubernetes services (EKS, GKE, AKS) each have specific support lifecycles, and we need to stay within them to remain eligible for support.

This article details our experiences of trying to achieve the above, issues we’ve hit along the way, and some suggested timelines and approaches you may want to consider for your own clusters.

Tradeoff: Kubernetes Feature Availability vs. Upgrade Cadence

When a new version of Kubernetes deprecates or removes a particular API, all consumers of that API need to be updated. An example was in v1.22, where a lot of long-deprecated APIs were removed, including extensions/v1beta1 and networking.k8s.io/v1beta1 versions of ‘Ingress’.
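To make the impact concrete, here is a minimal sketch of checking a single manifest against a table of removed API versions. The `REMOVED_APIS` table holds only the two Ingress versions mentioned above, and the function names are hypothetical; a real check would need the full list from the upstream migration guide.

```python
from typing import Optional, Tuple

# Removed API versions keyed by (apiVersion, kind). Values are
# (Kubernetes version that removed the API, replacement apiVersion).
# Illustrative subset only: just the Ingress versions named in the text.
REMOVED_APIS = {
    ("extensions/v1beta1", "Ingress"): ("1.22", "networking.k8s.io/v1"),
    ("networking.k8s.io/v1beta1", "Ingress"): ("1.22", "networking.k8s.io/v1"),
}


def parse_minor(version: str) -> Tuple[int, int]:
    """Turn '1.22' or 'v1.22.3' into a comparable (major, minor) tuple."""
    major, minor = version.lstrip("v").split(".")[:2]
    return int(major), int(minor)


def check_manifest(manifest: dict, target_version: str) -> Optional[str]:
    """Return a warning if the manifest uses an API that no longer exists in target_version."""
    key = (manifest.get("apiVersion"), manifest.get("kind"))
    if key not in REMOVED_APIS:
        return None
    removed_in, replacement = REMOVED_APIS[key]
    if parse_minor(target_version) < parse_minor(removed_in):
        return None  # still served by the target version
    return f"{key[1]} {key[0]} is removed in {removed_in}; use {replacement}"
```

Calling `check_manifest({"apiVersion": "extensions/v1beta1", "kind": "Ingress"}, "1.22")` returns a warning, whereas the same manifest checked against `"1.21"` returns `None`.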

In order to help development teams onboard onto our clusters as quickly and easily as possible, we have developed some custom deployment tooling so that they don’t have to write Kubernetes manifests directly. In addition, it ensures that teams comply with certain policies (security requirements, PodDisruptionBudgets, etc.) by default, all of which help us to keep our clusters performant and running smoothly. On the face of it then, this would seem like an ideal scenario; we have a single consumer of the Kubernetes APIs and we, as authors of that deployment tool, can update it as and when those APIs are deprecated and removed. However, there are two important caveats:

- Tenants need to upgrade the version of the deployment tool to pick up those API changes. Admittedly, this should be a much easier request to see completed than having to update potentially many manifests, but we still ultimately have a dependency on an external team.

- Tenants aren’t obligated to use the deployment tool. One of our primary design philosophies is that we should strive to give our tenants the ability to utilise any Kubernetes feature they wish to get their job done. Within reason, of course! If we were to make the deployment tool the only way in which tenants interact with the clusters, they would be unable to experiment with new features until we were able to make time to update the tooling. Additionally, tenants may need to write their own manifests as an ’escape hatch’ in case there are specific reasons that the default security or PDB policies, for example, aren’t suitable for their particular application.

On balance, we believe that making new features available to tenants outweighs our dependency on them to facilitate migration to new Kubernetes APIs.

Upgrade Related Tooling

Until relatively recently, we had very limited visibility into how widely deprecated and to-be-removed APIs were being used, so it was hard to gauge the impact that any particular upgrade was going to have. We have since adopted kube-no-trouble, which analyses your clusters and shows any resources that are using APIs that have been deprecated or will be removed in future Kubernetes versions. Out of the box it only provides text output suitable for ad-hoc runs, so we integrated it with Prometheus using kube-no-trouble#302 almost verbatim. This has given us visibility of upgrade-blocking issues at a per-team and per-namespace level, and lets us provide tenant-facing dashboards so that tenants can easily monitor their compliance status.
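As a rough illustration of the idea (this is not the code from kube-no-trouble#302), the sketch below counts findings per namespace and renders them in the Prometheus text exposition format. It assumes findings shaped like kube-no-trouble’s JSON output, with `Namespace`, `Kind` and `ApiVersion` keys; check that against the version you run. The metric name `deprecated_api_resources` is invented for this example.

```python
import json
from collections import Counter


def findings_to_prometheus(findings_json: str) -> str:
    """Aggregate findings and render them as Prometheus text-format gauges."""
    findings = json.loads(findings_json)
    # Count affected resources per (namespace, kind, apiVersion).
    counts = Counter(
        (f.get("Namespace", ""), f.get("Kind", ""), f.get("ApiVersion", ""))
        for f in findings
    )
    lines = [
        "# HELP deprecated_api_resources Resources using deprecated or removed APIs.",
        "# TYPE deprecated_api_resources gauge",
    ]
    for (namespace, kind, api_version), count in sorted(counts.items()):
        # Label values are assumed not to contain quotes or backslashes.
        lines.append(
            f'deprecated_api_resources{{namespace="{namespace}",kind="{kind}",'
            f'api_version="{api_version}"}} {count}'
        )
    return "\n".join(lines) + "\n"
```

Keeping the namespace as a label is what makes per-tenant dashboards possible: each team can filter the same metric down to the namespaces it owns.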

Other Upgrade Considerations

Upgrades to Kubernetes may require changes to other components, which necessitates pre-upgrade work. For example, 1.24 required a move away from the dockershim container runtime to an OCI-compliant runtime, e.g. containerd. Similarly, 1.27 requires the installation of a CSI storage driver where the equivalent in-tree storage plugin has been removed. Even though these examples are unlikely to require coordination with tenants, such preparatory work can take significant effort. Hence, it needs to be analysed and planned in, with plenty of lead time before the actual upgrade.

Upstream Kubernetes Support Schedule

Upstream Kubernetes releases receive approximately 12 months of support, followed by a short maintenance period before end of life, which is why the tables below show around 14 months from release to end of support. Full details of the support schedule are available in the upstream Kubernetes release documentation.

However, if you are using an upstream Kubernetes release (i.e. you are operating “turnkey” clusters), you will also need to take into account Kubernetes’ version skew policy. This dictates how much difference there can be between the versions of the components that the control plane runs and the versions of the components that the Kubernetes nodes run.

In short:

- API servers: In an HA setup, these must be within 1 minor version of each other; this is to support upgrades. e.g. you’re allowed to do a rolling upgrade such that API servers are on a mix of `1.28` and `1.27`, but a mix of `1.28` and `1.26` is not allowed.

- Nodes: From `1.25` onwards, nodes must be within 3 minor versions of `kube-apiserver`. e.g. if `kube-apiserver` is at `1.28`, nodes are permitted to be running `1.28`, `1.27`, `1.26`, or `1.25`. Prior to `1.25`, nodes must be within 2 minor versions of `kube-apiserver`.
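The two rules above can be sketched as a pair of checks. This is a simplified illustration that assumes a major version of 1 and compares minor numbers only; the function names are ours. It also encodes one rule from the upstream policy that isn’t spelled out above: nodes must never be newer than `kube-apiserver`.

```python
from typing import List


def minor(version: str) -> int:
    """Extract the minor number from a version such as '1.28' or 'v1.28.3'."""
    return int(version.lstrip("v").split(".")[1])


def apiservers_compatible(versions: List[str]) -> bool:
    """API servers in an HA setup must be within 1 minor version of each other."""
    minors = [minor(v) for v in versions]
    return max(minors) - min(minors) <= 1


def node_compatible(apiserver: str, node: str) -> bool:
    """Nodes may lag kube-apiserver by 3 minors (2 for nodes older than 1.25)."""
    api_minor, node_minor = minor(apiserver), minor(node)
    allowed_skew = 3 if node_minor >= 25 else 2
    # A negative difference means the node is newer than the API server,
    # which the upstream policy does not permit.
    return 0 <= api_minor - node_minor <= allowed_skew
```

For example, `node_compatible("1.28", "1.25")` is `True`, while `node_compatible("1.28", "1.24")` is `False` because a `1.24` node is only allowed to lag by 2 minor versions.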

As you can see from the above, the skew policy means nodes do not have to be upgraded on every release. Whilst it is recommended to keep both nodes and API servers up to date, there may be circumstances that dictate otherwise. For example, if your clusters are sufficiently far behind the latest release, you may choose to skip some node upgrades in order to ‘catch up’ more quickly to supported versions.

Vendor Support Schedules

The table below consolidates data taken from the EKS Kubernetes Release Calendar and GKE Release Schedule at the time of writing. Its inclusion here is merely illustrative of the general support policy. For simplicity, we only consider the GKE ‘Regular’ release channel dates as that channel is recommended for most customers and its release/support dates more closely align with EKS for the most recent releases.

| Kubernetes version | Upstream release | AWS EKS release | GCP GKE (Regular) release | Upstream end of support | AWS EKS end of support | GCP GKE end of support |
| --- | --- | --- | --- | --- | --- | --- |
| 1.27 | April 11, 2023 | May 24, 2023 | June 14, 2023 | June 28, 2024 | July 2024 | August 31, 2024 |
| 1.26 | December 9, 2022 | April 11, 2023 | April 14, 2023 | February 28, 2024 | June 2024 | June 30, 2024 |
| 1.25 | August 23, 2022 | February 22, 2023 | December 8, 2022 | October 28, 2023 | May 2024 | February 29, 2024 |
| 1.24 | May 3, 2022 | November 15, 2022 | August 19, 2022 | July 28, 2023 | January 31, 2024 | October 31, 2023 |
| 1.23 | December 7, 2021 | August 11, 2022 | April 29, 2022 | February 28, 2023 | October 11, 2023 | July 31, 2023 |

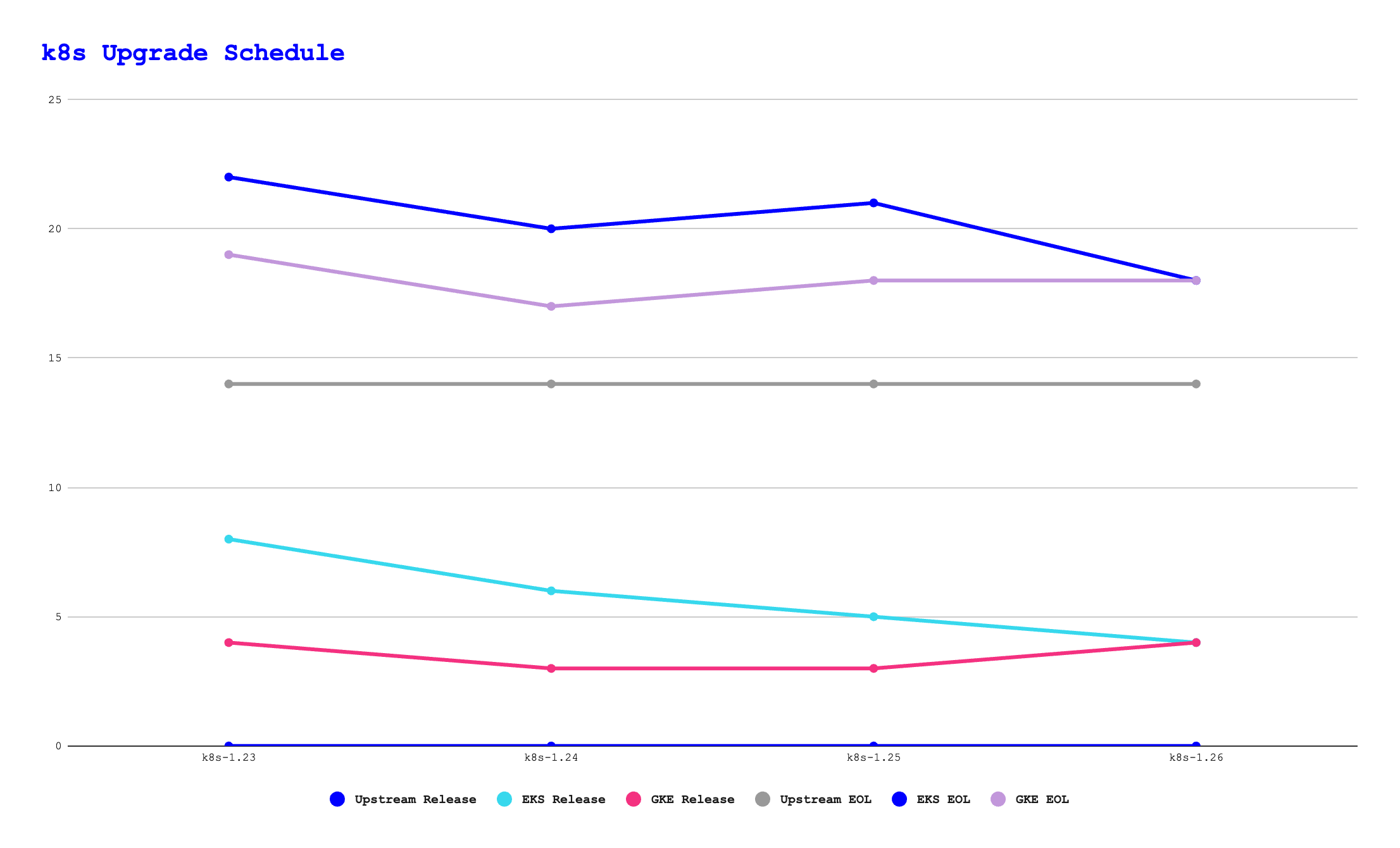

That translates to the following elapsed time, in whole months, from the upstream release:

| | k8s-1.23 | k8s-1.24 | k8s-1.25 | k8s-1.26 | k8s-1.27 |
| --- | --- | --- | --- | --- | --- |
| EKS Release | 8 | 6 | 5 | 4 | 1 |
| GKE Release | 4 | 3 | 3 | 4 | 2 |
| Upstream EOL | 14 | 14 | 14 | 14 | 14 |
| EKS EOL | 22 | 20 | 21 | 18 | 15 |
| GKE EOL | 19 | 17 | 18 | 18 | 16 |

As you can see, the timespan between an upstream release and its adoption in EKS and GKE is somewhat variable. However, it looks like both EKS and GKE aim to have releases available within 6 months of an upstream release being made. For the most recent releases, both vendors support a given release for around 14 months from the date they first make it available, as does the upstream Kubernetes project.
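The elapsed-month figures are completed whole months between two dates. A minimal sketch of that arithmetic, using dates from the first table (the function name is ours, and entries that only give a month and year are left out because they have no exact day):

```python
from datetime import date


def whole_months_between(start: date, end: date) -> int:
    """Number of completed calendar months from start to end."""
    months = (end.year - start.year) * 12 + (end.month - start.month)
    if end.day < start.day:
        months -= 1  # the final month has not fully elapsed yet
    return months


# Kubernetes 1.27, dates taken from the table above.
upstream_release = date(2023, 4, 11)
print(whole_months_between(upstream_release, date(2023, 5, 24)))  # EKS release
print(whole_months_between(upstream_release, date(2023, 6, 14)))  # GKE release
print(whole_months_between(upstream_release, date(2024, 6, 28)))  # upstream EOL
```

Rounding down explains why, for example, 1.25 shows 5 months for EKS even though its release landed one day short of six months after upstream.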

Recommendations

- Weigh up the tradeoff between feature availability and dependency on tenants yourself. Some factors you may want to consider:
  - Number and scale of your clusters
  - Familiarity of your tenants with Kubernetes
  - Willingness/agreement to break tenant deployment pipelines

- Specifically related to the last point above, consider adopting a contract that codifies expectations between you and your tenants when they onboard onto your platform. e.g. “We, as cluster operators, will provide you, the tenant, with x months’ notice to adapt to upstream Kubernetes API changes and provide support for those migrations. You, as a tenant, commit to adapting to such API changes within that time frame”. Having that agreement in place can be beneficial when escalating upgrade-related blockers with development teams.

- Announce API deprecations and removals as early as possible. The Kubernetes Deprecated API Migration Guide is updated ahead of each upstream release. Keeping a close eye on that and letting tenants know of changes well ahead of your planned upgrade date will maximise the time they have to get any required code fixes in place.

- Upgrade schedule: Bearing in mind all of the points above, and the various vendors’ release and support schedules, we currently recommend the upgrade schedule below. It is designed to maximise the length of time you remain in support on any given release, on the assumption that you run both turnkey clusters and cloud-provider managed clusters, as we currently do.

The schedule will provide for up to 10 months of running your “turnkey” clusters on an upstream-supported version of Kubernetes, and likely around 12-13 months of support on EKS & GKE clusters by the time you reach production depending on your own rollout timescales.

| Timescale | Action |
| --- | --- |
| Continuous | Monitor upstream Changelogs, blog posts, and deprecation guides for API deprecation and removal announcements. Cross-check with `kube-no-trouble` and coordinate with tenants to remove usage of those APIs. |
| Between "Upstream release -2 months" and "Upstream release +3 months" | Ensure all upgrade prerequisites are met in production clusters. |
| Upstream release +4 months (depending on EKS + GKE availability) | Upgrade pre-dev clusters to upstream release |
| Between "Upstream release +4 months" and "Upstream release +6 months" | Upgrade dev, test and production clusters to upstream release |
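To turn the schedule into calendar dates for a particular release, something like the following sketch could be used. The offsets come straight from the table; the function and key names are hypothetical.

```python
import calendar
from datetime import date
from typing import Dict


def add_months(d: date, months: int) -> date:
    """Shift a date by a number of months, clamping to the end of short months."""
    index = d.year * 12 + (d.month - 1) + months
    year, month = divmod(index, 12)
    month += 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def upgrade_schedule(upstream_release: date) -> Dict[str, date]:
    """Milestones from the recommended schedule, relative to the upstream release."""
    return {
        "prerequisites_start": add_months(upstream_release, -2),
        "prerequisites_end": add_months(upstream_release, 3),
        "pre_dev_upgrade": add_months(upstream_release, 4),
        "production_upgrade_deadline": add_months(upstream_release, 6),
    }


# Example: Kubernetes 1.27 was released upstream on April 11, 2023.
for milestone, when in upgrade_schedule(date(2023, 4, 11)).items():
    print(f"{milestone}: {when.isoformat()}")
```

With upstream end of support at around 14 months after release, starting upgrades at +4 months is what leaves up to 10 months on an upstream-supported version.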

Summary

Keeping multi-tenanted Kubernetes clusters up to date with upstream releases in order to obtain new features, bug fixes and security fixes can be a daunting task, especially given how frequent those releases are. However, with a clear understanding of commitments on the part of both tenant and cluster administration teams, and upgrade schedules that align with upstream’s release cycles, we believe that such upgrades can and should be treated as business-as-usual activities rather than the fear-inducing major projects that they can sometimes feel like.